How peer review enables better learning in online courses

By Katherine Ouellette

Online courses attract thousands of learners, but grading that many assignments often limits instructors to a narrow set of assessment formats, such as multiple-choice questions. New research from MIT Open Learning explores a solution for evaluating open-ended assessments that also improves learners’ performance.

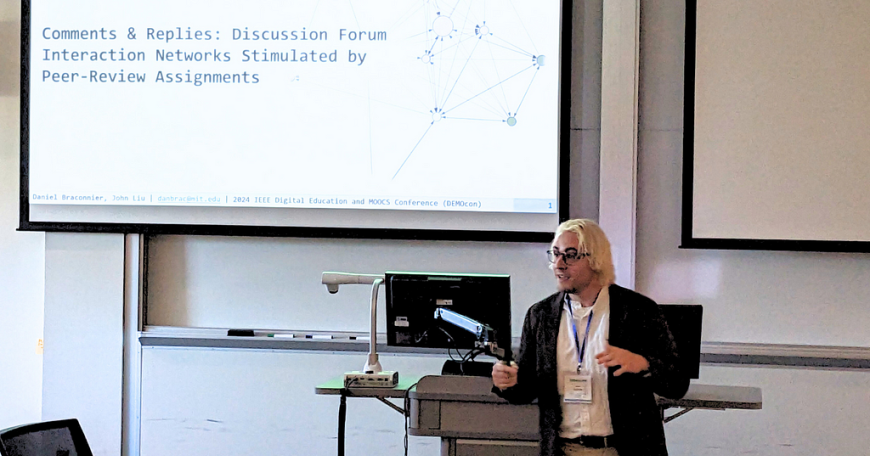

Daniel Braconnier, postdoc at the MIT Department of Mechanical Engineering and Open Learning’s Digital Learning Lab, and John Liu, digital learning scientist at Open Learning, presented at the 2024 IEEE Digital Education and Massive Open Online Courses Conference earlier this fall. Their work, “Comments & Replies: Discussion Forum Interaction Networks Stimulated by Peer-Review Assignments,” was a finalist for best paper. Braconnier and Liu studied whether online learners who gave more feedback to peers in the same Open Learning course would perform better overall. By analyzing how learners interacted with each other on CrowdLearn, a peer assessment tool developed by FeedbackFruits, Braconnier and Liu determined that learners who made more connections received better grades.

Here, Braconnier explains how peer review enables complex questions and deeper learner engagement in online courses.

Q: Why did you start using the CrowdLearn peer assessment tool? What problem were you trying to solve?

A: We wanted to enable a more enriched open-ended assessment experience in MIT Open Learning’s online courses on MITx. Prior to the integration of CrowdLearn, prompts were commonly answered via multiple choice, short text responses, or a rigid open response peer-review system. Attempting prompts with longer text responses, such as project presentations or write-ups, would require serious overhead and attention by course staff to grade all submissions. The previous peer-review tool we used offered fewer ways for learners to interact with their peers’ work.

CrowdLearn lets learners grade their peers’ work and chat with each other in a discussion forum-style interface, which was a new feature for our courses. Learners access the CrowdLearn tool through a portal in their online course. Involving the learners in the assessment process expands the number of people grading the open response projects, which takes the load off instructors and makes it possible to assign more open-ended projects.

Q: How does the tool work?

A: Learners first submit their assignments as PDFs, which unlocks access to the answer key and the peer review phase. Each learner is required to grade and comment on the submissions of one to four randomly assigned peers.

After this prescribed interaction, any learner can leave comments, replies, and upvotes on any submission. The CrowdLearn platform visually organizes all of the assignments like posts on a discussion forum. The complete comment history, including the original peer review, is nested under dropdown buttons.

After open discussion, learners are asked to reflect on how this experience affected their learning of course content.

Q: How did you measure the efficacy of CrowdLearn?

A: Our aim was to use the user interaction data, such as comments, replies, and upvotes, to define connections between learners. We hypothesized that if the tool increases interactions between learners, then the learners who make the most connections would do better in the course overall. Our analysis showed these interactions between learners improved grades.

Depending on how many learners are enrolled in a course, the data reveals a maximum metric of “connectedness” that individual learners can reach. This maximum can be used to compare the success of CrowdLearn with similar tools aimed at creating connected online class experiences at scale.
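As a rough illustration of how interaction data can be turned into connections, and not the method used in the paper, the sketch below treats any comment, reply, or upvote between two learners as one undirected connection. It scores each learner by the share of classmates they are connected to, using enrollment minus one as an assumed ceiling. The interaction log, learner names, and the `build_connections` and `connectedness` helpers are all hypothetical.

```python
from collections import defaultdict

# Hypothetical interaction log: (actor, recipient, kind). Not real course data.
interactions = [
    ("ana", "ben", "comment"),
    ("ben", "ana", "reply"),
    ("ana", "cho", "upvote"),
    ("dev", "ana", "comment"),
    ("cho", "ben", "comment"),
]


def build_connections(interactions):
    """Map each learner to the set of distinct peers they interacted with."""
    peers = defaultdict(set)
    for actor, recipient, _kind in interactions:
        if actor == recipient:
            continue  # ignore self-interactions
        # Assumption: a connection is undirected, so record it for both sides.
        peers[actor].add(recipient)
        peers[recipient].add(actor)
    return peers


def connectedness(peers, enrolled):
    """Distinct peers per learner, divided by the assumed ceiling (enrolled - 1)."""
    ceiling = enrolled - 1
    return {learner: len(p) / ceiling for learner, p in peers.items()}


peers = build_connections(interactions)
# Assumption: five learners are enrolled, one of whom never interacts.
scores = connectedness(peers, enrolled=5)
```

Learners with no interactions never appear in `scores`, so a real analysis would need to add them with a score of zero before comparing connectedness against grades.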

Q: Have you used other peer assessment tools in the past? How did they compare?

A: Learning management systems like Canvas provide functionality similar to CrowdLearn’s, such as graded discussion forums and peer review-style assignments. I believe the key difference is CrowdLearn’s streamlined interface, which guides learners from the peer review phase to an open discussion forum where they can continue their conversations and join others’ discussions. This primes the learners to connect more with the assigned content and each other.

Q: How do you plan to use this knowledge to improve learner outcomes?

A: We still have more learner interaction data to analyze. Some areas we’d like to explore include:

- Upvote interactions: This analysis could help us define a separate learner class that primarily uses more passive interactions than comments and replies.

- Text from comments and replies: Sentiment analysis could tell us which types of messages lead to more responses and more network connections.

- Length of comments and replies: Filtering the quality of interactions may help us understand the strength of the various connections learners make within a network.

Excitingly, this analysis could help us program an algorithm for the discussion forum, similar to a chatbot. The goal is to nudge learners to interact with others who are discussing similar topics, submitting similar thoughts, or even expressing diverging opinions. This concept is still new territory, so we don’t know what bounds there are to this trend.

How peer review enables better learning in online courses was originally published in MIT Open Learning on Medium, where people are continuing the conversation by highlighting and responding to this story.